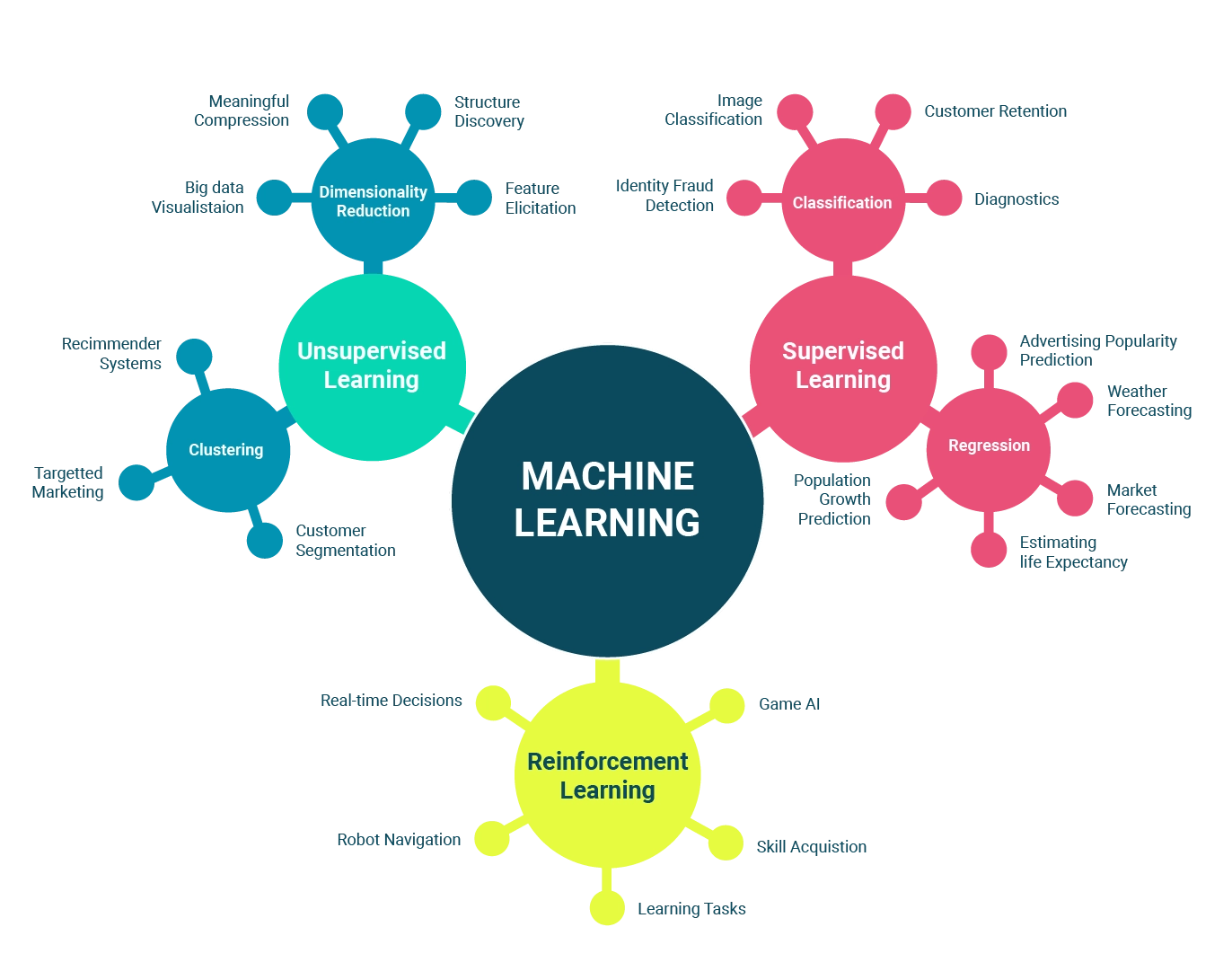

Recent innovations in artificial intelligence have led to significant advances in the processing capacity and performance of AI systems. Key innovations such as Deep Learning, Reinforcement Learning and Transfer Learning have revolutionized the field of AI and paved the way for a new era of applications and technological advances. In this context, future developments are expected to focus on natural language understanding, computer vision, and content generation.

- Natural Language Processing (NLP): NLP is a rapidly evolving field that aims to improve communication between machines and humans, with natural language understanding as one of its core goals. With the advent of large language models such as OpenAI's GPT-3, advances in NLP are expected to lead to more natural and sophisticated interactions between users and AI systems. NLP will also have a major impact on applications such as content generation, sentiment analysis, and machine translation.

- Computer vision: Computer vision is another rapidly growing field, with significant advances in the ability of machines to recognize and interpret images and videos. Thanks to Deep Learning and techniques such as convolutional neural networks (CNNs), major advances are being made in image segmentation, object recognition, and the development of artificial intelligence systems that can "see" the world like humans. This progress could lead to a wide range of applications, from autonomous driving to AI-assisted medical diagnosis.

- Content Generation: Content generation via AI is gaining more and more ground, with the creation of high-quality text, images, video and audio through the use of advanced algorithms. For example, Generative Adversarial Networks (GANs) have been used to generate realistic images and videos, opening up new opportunities in the entertainment and advertising industries.

Some of the most promising public and private research centers in the field of AI include:

- OpenAI: Co-founded in 2015 by Sam Altman, Elon Musk and other leading entrepreneurs, OpenAI is dedicated to the research and development of advanced and safe artificial intelligence, with the goal of ensuring that the benefits of AI are shared by all of humanity.

- DeepMind: Acquired by Google in 2014, DeepMind is known for Reinforcement Learning systems such as AlphaGo, which defeated world champion Lee Sedol at Go, and AlphaZero, which learned Go, chess and shogi through self-play and beat the strongest existing programs in each game.

As we have seen, artificial intelligence has led to significant advances in various fields, but it is critical to ensure that it is used responsibly and that its benefits are shared equitably. Key ethical and social issues include inclusion, bias in AI systems, and data protection.

- Inclusion: Reducing the digital divide and providing equitable access to AI technologies are crucial to ensuring that everyone can benefit from advances in AI. This involves improving training, education, and access to digital infrastructure, especially in disadvantaged and developing communities. In addition, it is important to ensure that people with disabilities can benefit from AI technologies, for example, through the development of assistive devices and accessible interfaces.

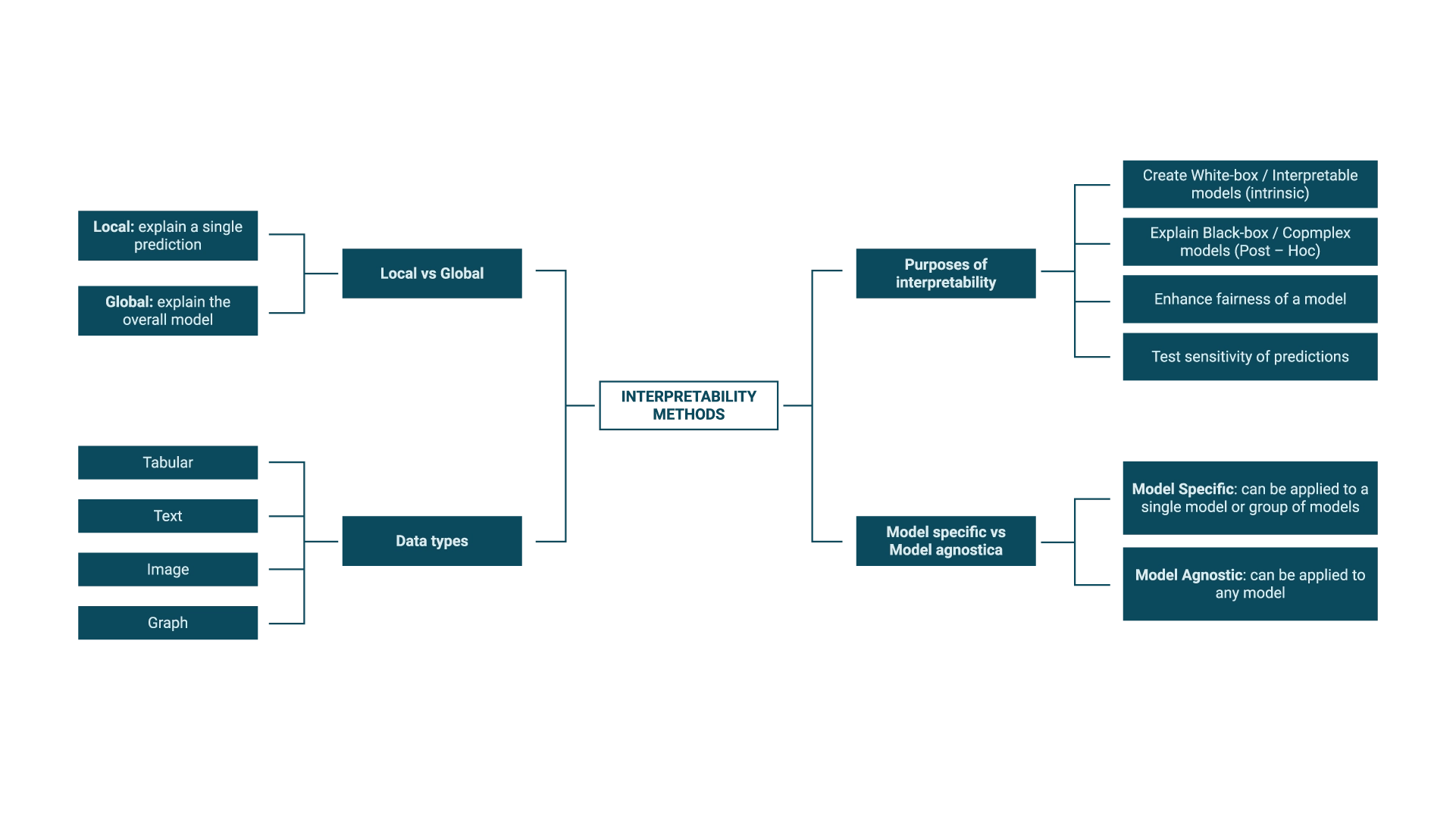

- Ethical Bias: AI algorithms can incorporate and perpetuate biases and discrimination present in the data they are trained on, leading to unfair outcomes and inequalities. To address this problem, it is necessary to promote research and development of unbiased and responsible algorithms that take into account ethical implications. In addition, it is essential to encourage diversity and inclusion among AI researchers and developers to ensure that diverse perspectives are represented in the development of these technologies.

- Data protection: The collection and analysis of massive amounts of personal data by companies and organizations using AI raises concerns about data privacy and security. It is critical to establish and enforce data protection standards and privacy regulations that ensure that users have control over their data and that the organizations that manage it are accountable.
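
To make the bias point above more concrete, the sketch below shows one simple way to check whether a system's decisions differ between groups. It computes the share of favourable decisions per group and the gap between the highest and lowest share, a measure often called the demographic parity difference. This is only one of several fairness measures, and the function names, group labels, and sample decisions are hypothetical, chosen purely for illustration.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where
    decision is 1 for a favourable outcome and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions produced by some model for two groups, "A" and "B".
decisions = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(decisions)
print(rates)  # selection rate per group
print(gap)    # 0.0 would mean both groups are selected at the same rate
```

A large gap does not prove discrimination on its own, but it is a signal that the training data and the model deserve closer review.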

Other considerations include the impact of AI on the labor market and the skills required of workers. Automation and AI may eliminate some jobs while creating new ones, which will require reskilling and the adaptation of educational and vocational training systems.

In summary, addressing the social and ethical impacts of AI requires a holistic approach that promotes inclusion, combats ethical bias, and ensures data protection, as well as preparing the workforce for emerging challenges and opportunities.

Artificial Intelligence and Machine Learning are transforming our world in surprising and unpredictable ways. Through their powerful learning and adaptive capabilities, these systems are changing the way we work, communicate and solve complex problems. As we continue to explore and harness the potential of these technologies, it is essential that we do so in a way that is responsible and mindful of the ethical and societal challenges that lie ahead.