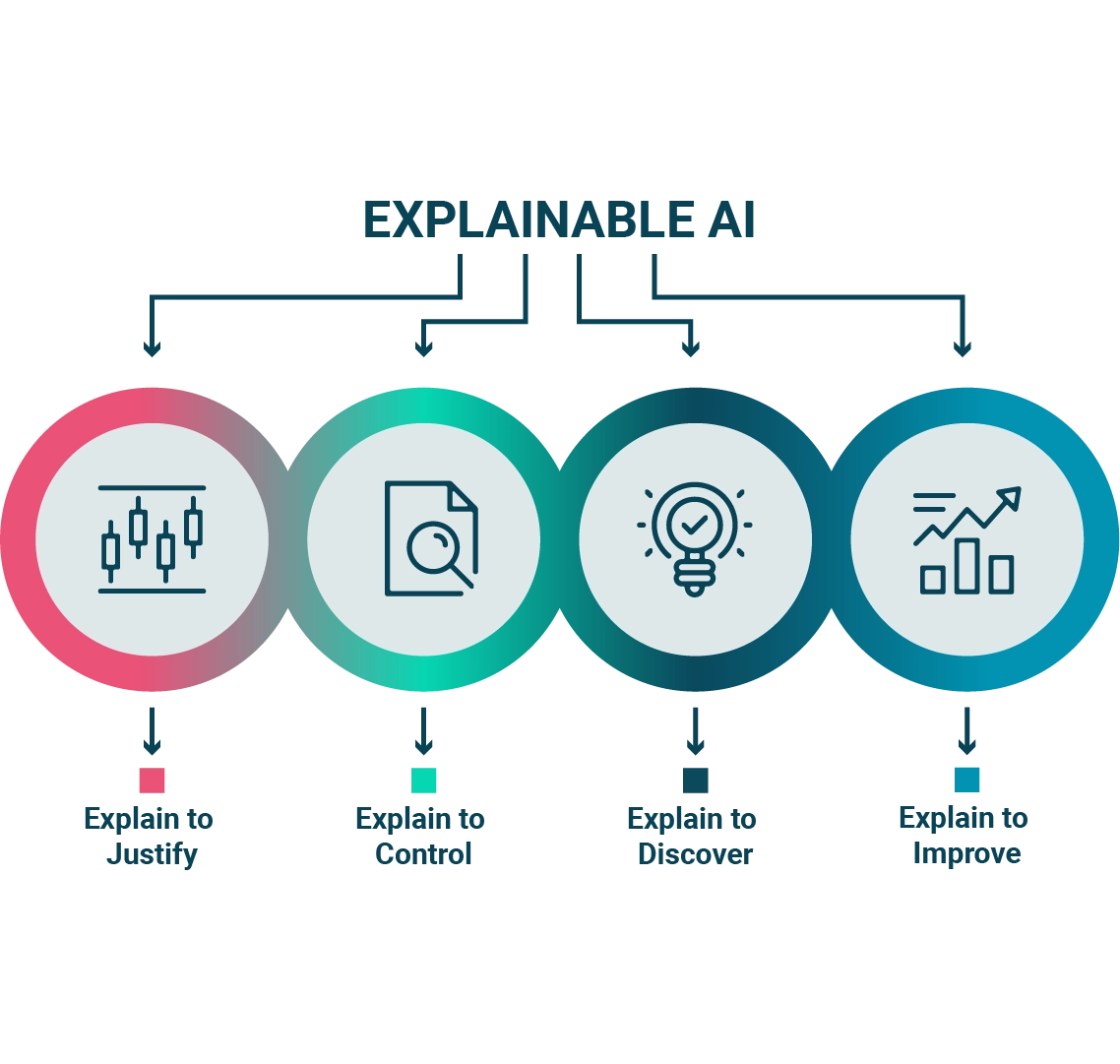

Artificial intelligence (AI) is one of the most promising technologies of our time. However, many people remain skeptical about adopting it because its decision-making processes lack transparency. For this reason, Explainable AI (XAI), which aims to make AI decisions transparent, is becoming increasingly important. In this article, we discuss how XAI can help overcome mistrust of AI adoption and what its benefits are.

XAI is an emerging technology that addresses this problem by explaining how an AI system reached a particular decision. Below, we go over its advantages, the techniques it uses, its applications, and its limitations.

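XAI has several advantages: it can make AI decision making more transparent, increase people's trust in AI, and improve AI reliability.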

XAI uses several techniques to explain how an AI system reached a particular decision.
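To make the idea of "explaining a decision" concrete, here is a minimal sketch of one simple approach: additive feature attribution for a linear scoring model. It is an illustration only and not necessarily one of the techniques this article has in mind. The model, its weights, the feature names, and the baseline applicant are all hypothetical.

```python
from typing import Dict

# Hypothetical linear scoring model. The feature names and weights are
# illustrative assumptions, not taken from any real system.
WEIGHTS: Dict[str, float] = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = 0.1


def predict(features: Dict[str, float]) -> float:
    """Return the model score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def explain(features: Dict[str, float], baseline: Dict[str, float]) -> Dict[str, float]:
    """Attribute the score difference from a baseline input to each feature.

    For a linear model, each feature's contribution is its weight times how
    far the feature value lies from the baseline value. The contributions
    add up to predict(features) - predict(baseline).
    """
    return {
        name: WEIGHTS[name] * (features[name] - baseline[name])
        for name in WEIGHTS
    }


if __name__ == "__main__":
    # Hypothetical applicant and reference ("average") applicant.
    applicant = {"income": 2.0, "debt": 1.0, "years_employed": 4.0}
    baseline = {"income": 1.0, "debt": 0.5, "years_employed": 2.0}

    print("score:", predict(applicant))
    for name, contribution in explain(applicant, baseline).items():
        print(f"{name}: {contribution:+.2f}")
```

Each printed contribution shows how much a feature pushed the score up or down relative to the reference applicant. This works so directly only because the model is linear; more complex models generally need approximate attribution methods.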

XAI has numerous applications in various fields.

Despite its many benefits, XAI also has some limitations.

In conclusion, Explainable AI can help overcome mistrust about AI adoption. XAI can make AI decision making more transparent, increase people's trust in AI, and improve AI reliability. It also has numerous applications in various fields. Its limitations need to be considered, but XAI is still an important step forward in AI adoption and could have a significant impact on our daily lives.