A new paradigm that has recently appeared in the landscape of network architectures aims to extend the characteristics of the Cloud. The resulting model is particularly suitable for services and applications with demanding latency and bandwidth requirements.

To overcome the high latencies that prevent applications from running in the Cloud when they need near-real-time responses and generate heavy data traffic, this paradigm moves computing resources to a point in the network closer to where data is produced.

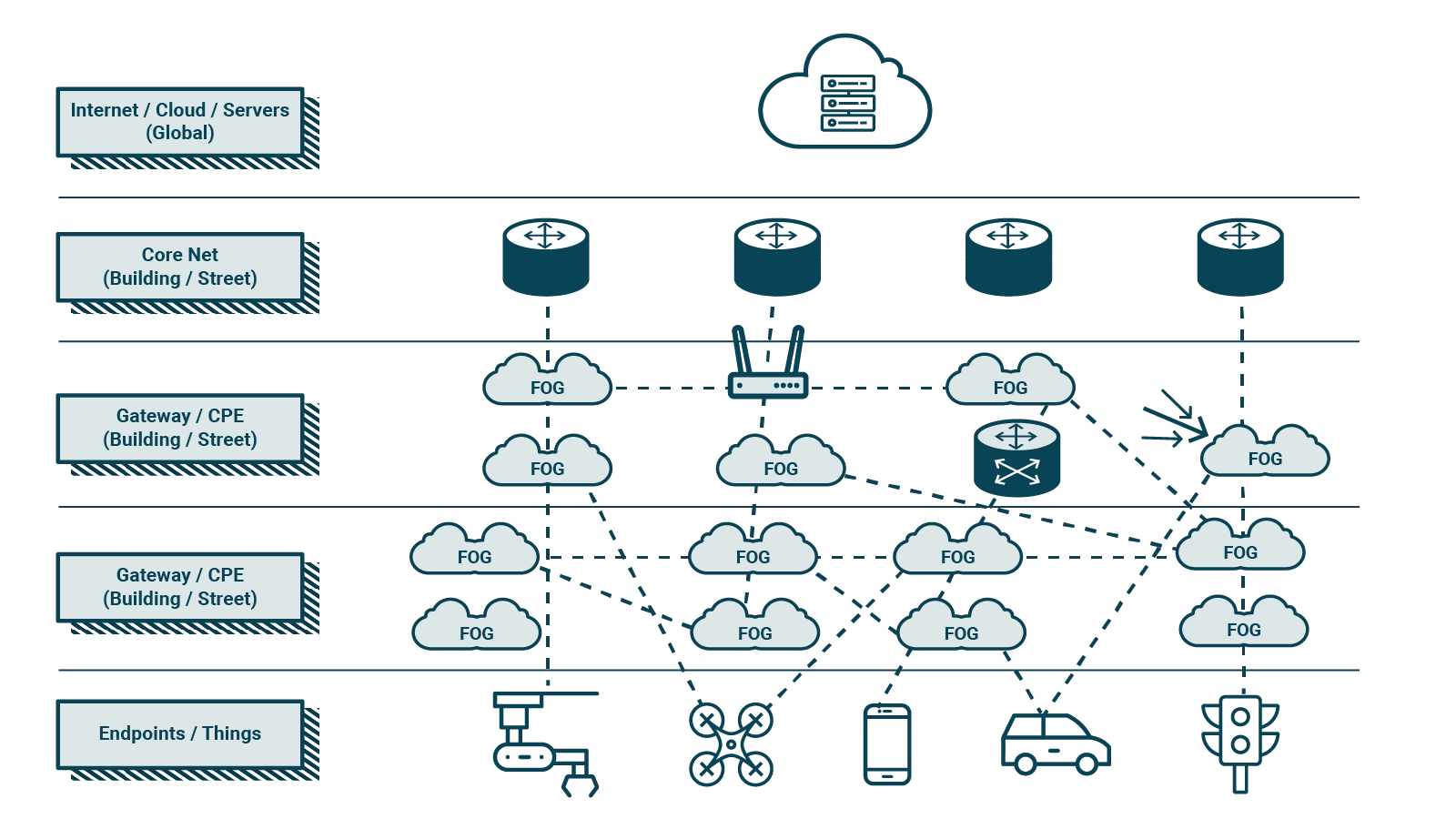

The three-tier Fog/Edge Computing model places usable resources at the edge of the network, much like the resources the Cloud makes available in the core network. The advantages of this model include low latency, support for mobility, and the ability to extract and use contextual information about users. The part of the edge infrastructure that delivers services relies on the Cloud for complex computations and for backing up large amounts of system state. This model enables existing infrastructure to meet the demands of modern applications. IoT applications benefit most, because they need to process data close to the source. Doing so minimises latency, avoids frequent connections to the Cloud, and limits the network traffic they generate. Wireless access points and base stations will themselves be able to host the resources needed for the continuous small-scale operations previously delegated to the Cloud. They can therefore provide low latency to every application that needs a real-time response when analysing the large volumes of data it collects and sends over the network.
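The division of labour described above, with latency-sensitive work kept at the edge and heavy computation sent to the Cloud, can be illustrated with a small placement rule. The following is a minimal Python sketch under stated assumptions, not a method taken from this article. The `Tier`, `Task` and `place` names are hypothetical. The latency and capacity figures are illustrative placeholders rather than measurements. The rule of choosing the tier farthest from the device that still meets the deadline is our own simplification.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Tier:
    name: str
    rtt_ms: float    # assumed round-trip latency from the device to this tier
    capacity: float  # assumed available compute, arbitrary units


@dataclass(frozen=True)
class Task:
    name: str
    deadline_ms: float  # latency budget for a response
    demand: float       # compute required, same units as Tier.capacity


# Hypothetical three-tier topology, ordered from the device towards the core.
# The numbers are illustrative placeholders, not measurements.
TIERS: Tuple[Tier, ...] = (
    Tier("device", rtt_ms=0.0, capacity=1.0),
    Tier("edge", rtt_ms=5.0, capacity=50.0),
    Tier("cloud", rtt_ms=80.0, capacity=10_000.0),
)


def place(task: Task, tiers: Tuple[Tier, ...] = TIERS) -> Optional[Tier]:
    """Return the tier farthest from the device that meets the task's
    deadline and has enough capacity, or None if no tier qualifies.

    Trying the cloud first keeps scarce edge resources free for work
    that cannot tolerate the cloud's latency."""
    for tier in reversed(tiers):
        if tier.rtt_ms <= task.deadline_ms and tier.capacity >= task.demand:
            return tier
    return None
```

With these placeholder figures, `place(Task("video-analytics", deadline_ms=20.0, demand=30.0))` selects the edge tier, because the cloud's assumed 80 ms round trip exceeds the budget. A backup job with a generous deadline lands in the cloud. A real scheduler would also account for processing and queueing time, not only network round-trip latency.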

Companies have adopted the Edge Computing paradigm for many reasons:

- Speed: Edge Computing makes practical an IT architecture in which many devices communicate by exchanging data in real time. Reducing operational latency to this degree would not be possible with cloud computing alone, especially for mobile applications. As we shall see, fully exploiting Big Data and analytics depends on effectively combining the strengths of the cloud and the edge in a single, seamless solution.

- Scalability: Edge Computing frees up centralised resources by handling operations close to the data. This makes IoT systems more agile to configure. Their expansion is no longer tied exclusively to the scalability of cloud resources: it can also be achieved simply by adding computing devices and micro data centres at the edge of the network. Edge computing thus provides two levels of resource scalability, one local and one in the cloud. The main burden is the expertise needed to configure a cloud-to-edge architecture that optimally balances the computational load across the system's functional layers.

- Resilience: Edge Computing reduces the amount of data that has to travel over the primary network and keeps data available at the network edge. This data management model makes the work pipeline less exposed to downtime than one that depends on a cloud data centre, because operationally useful data is available on the device itself or in a local micro data centre. In other words, guaranteeing the resilience of an edge-based system only requires periodic synchronisation between local data and cloud storage. This synchronisation can run much less frequently than local operations require.

- Security: Listing security as an advantage may seem paradoxical, because an edge architecture greatly multiplies a system's points of vulnerability. Each of its many nodes and gateways is an access point that a network attack could breach. Properly exploited, however, this same condition is a considerable asset for cybersecurity experts, who can isolate individual areas of the network to neutralise them in the event of a malicious incident. Security protocols can be activated to isolate any element of the system, from a single terminal to entire functional compartments.
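The Resilience point above rests on two ideas: serve data locally, and synchronise with the cloud only periodically. The following is a minimal Python sketch of that idea, not a prescribed design. `EdgeStore`, `upload` and `sync_interval_s` are hypothetical names. The cloud upload is any callable supplied by the caller. Coalescing pending writes so that only the latest value per key is sent is our own assumption.

```python
import time
from typing import Callable, Dict


class EdgeStore:
    """Hypothetical edge-node store: reads and writes are served locally,
    and pending changes are pushed to the cloud at most once per
    sync_interval_s seconds."""

    def __init__(self, upload: Callable[[Dict[str, object]], None],
                 sync_interval_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._data: Dict[str, object] = {}     # authoritative local copy
        self._pending: Dict[str, object] = {}  # changes not yet in the cloud
        self._upload = upload
        self._interval = sync_interval_s
        self._clock = clock
        self._last_sync = clock()

    def put(self, key: str, value: object) -> None:
        self._data[key] = value
        self._pending[key] = value  # assumption: only the latest value per key is synced
        self._maybe_sync()

    def get(self, key: str) -> object:
        return self._data.get(key)  # never touches the network

    def _maybe_sync(self) -> None:
        now = self._clock()
        if self._pending and now - self._last_sync >= self._interval:
            try:
                self._upload(dict(self._pending))  # send a copy of the batch
            except ConnectionError:
                return  # cloud unreachable: keep working locally, retry on a later put
            self._pending.clear()
            self._last_sync = now
```

Because `get` never touches the network, the node keeps operating while the cloud is unreachable. A failed upload leaves the changes pending until a later write triggers another attempt. In this sketch, synchronisation is only checked when a write arrives. A production node would more likely run it on a background timer. Injecting the clock makes the interval easy to test, for example by passing `clock=lambda: t[0]` with a mutable list `t`.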